Two Open Source OLAP Systems in 5 Minutes.

Apache Druid and ClickHouse. Specialized OLAP Solutions for Different Fields.

Hi guys!

I’m Stephen, a data engineer and a data analyst. I'm delighted to welcome you to this article on data analysis. I hope you find it enjoyable and informative!

In the space of data analysis, it's nearly impossible to avoid discussing OLAP systems.

I've always been a fan of BigQuery, known for its distributed computing engine, Dremel. Its user-friendly design makes it both easy to use and fast, suitable for most scenarios—but not all.

Specialized OLAP systems are required for specific situations to achieve optimal performance and meet unique requirements. This realization prompted me to start researching OLAP query engines for different purposes.

In this article, we'll explore two OLAP systems:

Both share common features of general OLAP systems, such as:

Column-based storage.

Designed to analyze huge amounts of data.

However, each has its characteristics, enabling them to handle different situations effectively.

I then read their official docs, papers, and various online resources, learning a lot from them.

As a result, I wrote this article, to help readers understand the features and appropriate use cases of these two OLAP systems in the time it takes to brew a cup of coffee.☕

Let’s dive into them!

Apache Druid

The name Druid comes from the Druid class in many role-playing games: it is a shape-shifter, capable of taking on many different forms to fulfill various different roles in a group.

Back in 2011, the developers of Druid faced several challenges while developing an OLAP system:

Ingesting data and making it immediately readable.

Supporting enough concurrency (1000+ users).

Achieving high query performance.

They built Druid, a real-time analytical data store to solve this issue.

As a result, Druid is a highly specialized database and query engine focused on real-time data analysis. These factors make Apache Druid a data querying system with distinct advantages and disadvantages.

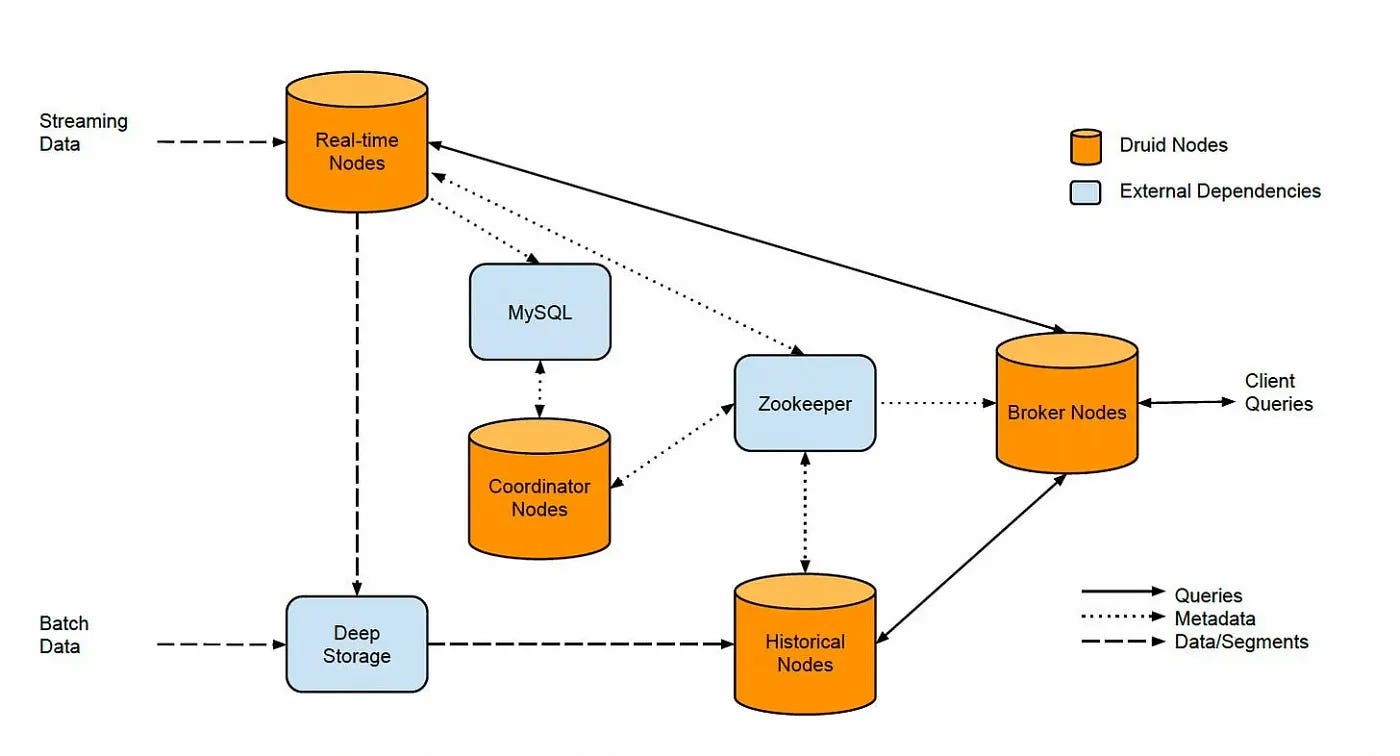

In this structure, different nodes have distinct features. Let's quickly go through them:

Real-time Nodes

Handle streaming data input and can be queried immediately.Deep Storage

A file storage system used for batch data ingestion, such as AWS S3 and GCP GCS.Historical Nodes

These nodes consist of memory and disks, using the mmap (memory-mapping) technique to reduce the query time for existing data.Zookeeper

Used to coordinate the state of different nodes.Coordinator Nodes

To communicate with Historical nodes to load or drop segments based on configurations. Segments are sets of data and indexes.Broker Nodes

They’re used to handle queries from users. They determine which services to forward queries to by first building a view of the system from information in ZooKeeper.MySQL

This database stores metadata, such as the list of all segments.

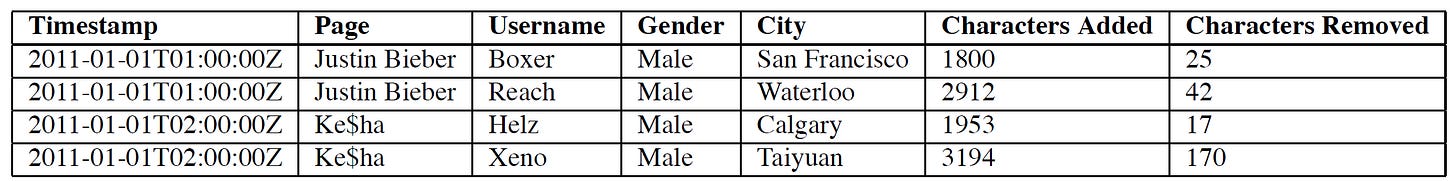

The paper includes an official example to help readers better understand Druid's use cases. According to Table 1, Druid is designed to handle queries such as:

Q1 - How many edits were made on the page Justin Bieber from males in San Francisco?

Websites like Wikipedia face countless users and edit actions. Druid can help the managing team get the results (Q1) in real time.

I think we have the whole picture now.👍 Next, let's take a look at the pros and cons of Druid:

Pros:

Handles huge amounts of streaming data ingestion, and can also deal with batch data ingestion.

Sub-second query latency, even for streaming data.

Highly scalable.

Excels at handling high cardinality data.

Cons:

Poor at handling JOIN operations. (Avoid using JOINs in Druid)

Real-time updates are not allowed.

JVM-based infrastructure has performance limitations compared to query engines built with C/C++. This issue was highlighted in the Databricks paper, which is one of the reasons that led to the development of Photon. The main reasons for these limitations are related to memory management and SIMD(Single Instruction, Multiple Data).

Extensive maintenance work is necessary due to the numerous components and dependencies required. However, there are now cloud-based Druid services that help maintain the infrastructure.

ClickHouse

It’s a combination of “Clickstream” and “Data wareHouse”. It comes from the original use case at Yandex.Metrica, where ClickHouse was supposed to keep records of all clicks by people from all over the Internet, and it still does the job.

by ClickHouse

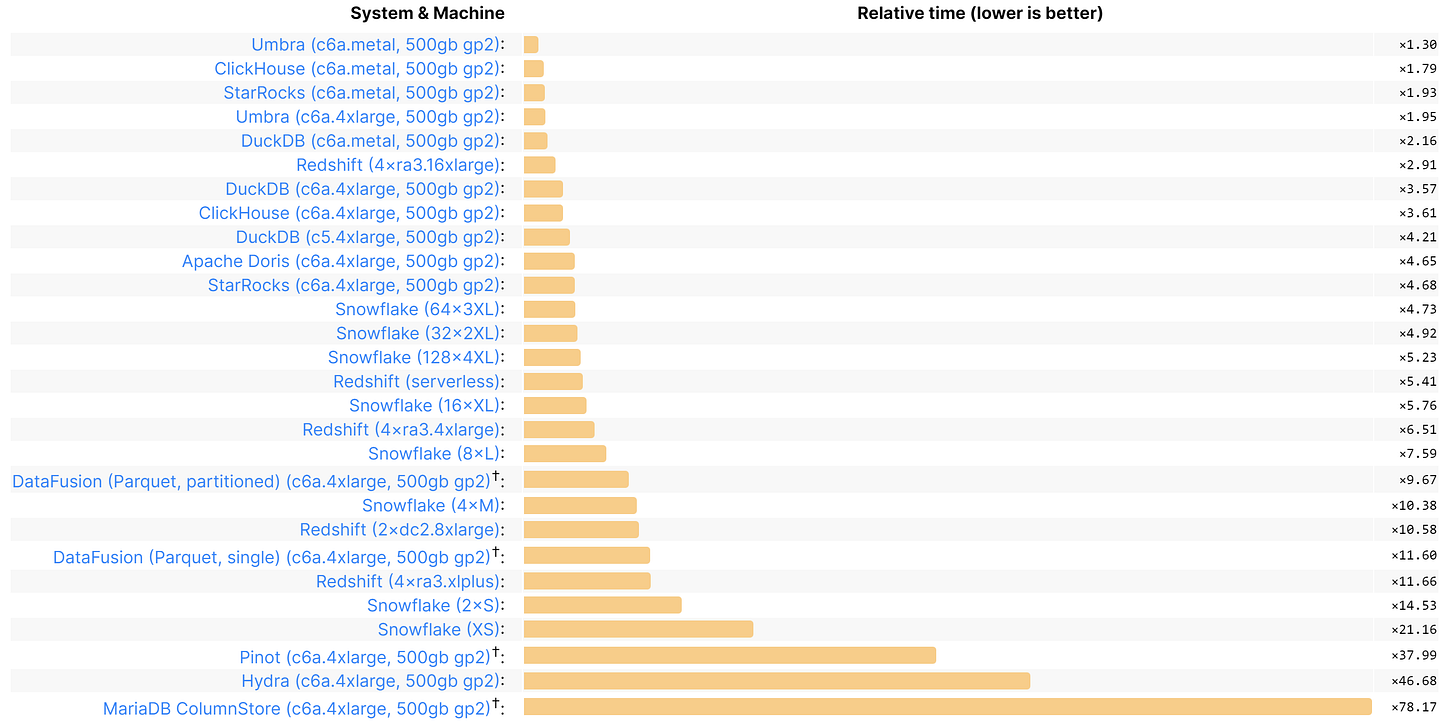

ClickHouse is a renowned OLAP DBMS, well-known for its lightning-fast querying speed. (it even maintains a performance benchmark ranking website!)

It implements various techniques to achieve this exceptional performance:

Specialized Codecs

ClickHouse uses LZ4 by default and employs a two-stage compression process to maximize data compression rates. The first stage involves specialized codecs, and the second stage uses general codecs.

(visit the official explanation and another article — How to speed up LZ4 decompression in ClickHouse? for more information).

These data compression methods offer two key benefits:Reduced Storage Usage: Compressing data effectively reduces the amount of storage needed.

Increased Memory Throughput: Efficient compression speeds up data transfer rates in memory.

Pre-sorting Data on Disk

To handle large volumes of data, ClickHouse uses memory and disk storage during querying. It pre-sorts the primary key on the disk to minimize disk I/O operations, enhancing overall performance.

Parallel and Distributed Processing

ClickHouse leverages parallel processing across multiple cores and distributed processing across multiple servers to achieve high-speed querying. This technique also increases the scalability.

Vectorized Query Execution

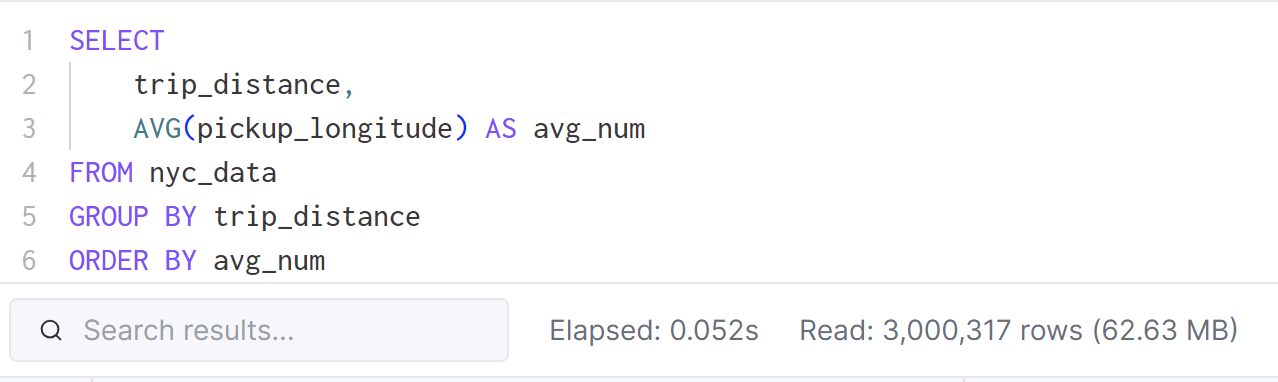

ClickHouse vectorizes columns, enabling the implementation of SIMD techniques to process data sets simultaneously (see Graph 1). This approach improves CPU cache utilization and increases processor throughput.

The points above are just some of ClickHouse’s key features, you can find more details on their website or in these two videos:

Like other OLAP systems, ClickHouse has its own set of pros and cons:

Pros:

Aggregates and analyzes wide tables (tables with many columns) in a very short time.

Handles batch and streaming data ingestion (performs best with batch data ingestion).

Offers a low learning curve, using a SQL-like query language.

Cons:

Performs poorly with JOIN operations, reducing query performance.

Inefficient for point queries that retrieve single rows using their keys.

Supports limited concurrency.

Lacks the ability to modify or delete already inserted data with high rate and low latency.

Summary

In the world of analytical data pipelines, finding an OLAP system that excels in all situations is a significant challenge. Each system has its strengths and weaknesses. The best approach is to understand the advantages and disadvantages of each tool and make an informed choice.

In a future article, I plan to discuss Apache Pinot, another OLAP system initially developed by LinkedIn, capable of handling real-time data needs for hundreds of millions of users. If you are interested, please subscribe to my newsletter—it will encourage me to write more articles like this.

If you have any suggestions or comments, or find any mistakes, feel free to leave a message or contact me.

Thanks for reading.

-Stephen Wen

I'd really appreciate your help in enhancing this publication, let me know any feedback through comments, Linkedin, or email.